RAID 10 is not the same as RAID 01.

This article explains the difference between the two with a simple diagram.

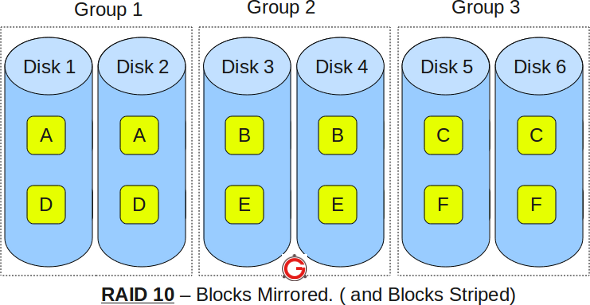

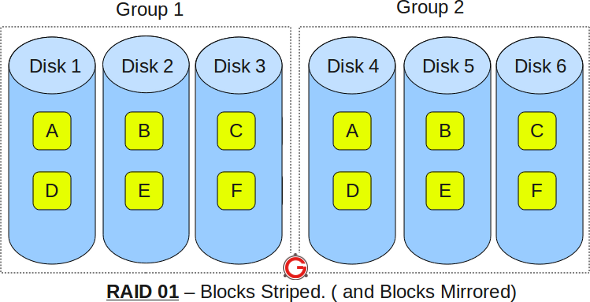

I’m going to keep this explanation very simple so that you can understand the basic concepts well. In the diagrams above, A, B, C, D, E and F represent blocks.

RAID 10

- RAID 10 is also called RAID 1+0

- It is also called a “stripe of mirrors”

- It requires a minimum of 4 disks

- To understand this better, group the disks in pairs (for mirroring). For example, if you have a total of 6 disks in RAID 10, there will be three groups (Group 1, Group 2 and Group 3), as shown in the above diagram.

- Within a group, the data is mirrored. In the above example, Disk 1 and Disk 2 belong to Group 1. The data on Disk 1 will be exactly the same as the data on Disk 2. So, block A written on Disk 1 will be mirrored on Disk 2. Block B written on Disk 3 will be mirrored on Disk 4.

- Across the groups, the data is striped, i.e., Block A is written to Group 1, Block B is written to Group 2, and Block C is written to Group 3.

- This is why it is called a “stripe of mirrors”: the disks within a group are mirrored, but the groups themselves are striped.
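The placement rule in the list above can be sketched in a few lines of Python. This is only an illustration of the diagram, not how any particular controller works: the function name `raid10_layout`, the 1-based disk numbering and the round-robin block order are all assumptions made for the example.

```python
def raid10_layout(blocks, num_disks):
    """Map each block to the mirrored pair of disks that stores it.

    Assumptions for this sketch: disks are numbered from 1, paired as
    (1, 2), (3, 4), ..., and blocks are striped round-robin across pairs.
    """
    if num_disks < 4 or num_disks % 2:
        raise ValueError("this sketch expects an even number of disks, at least 4")
    num_groups = num_disks // 2
    layout = {}
    for i, block in enumerate(blocks):
        group = i % num_groups               # stripe across the groups
        first = 2 * group + 1                # first disk of that group
        layout[block] = (first, first + 1)   # mirror within the group
    return layout


for block, disks in raid10_layout("ABCDEF", 6).items():
    print(block, "-> disks", disks)
```

With 6 disks, block A lands on Disks 1 and 2 and block B on Disks 3 and 4, which matches the example in the list.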

If you are new to this, make sure you understand how RAID 0, RAID 1 and RAID 5, as well as RAID 2, RAID 3, RAID 4 and RAID 6, work.

RAID 01

- RAID 01 is also called RAID 0+1

- It is also called a “mirror of stripes”

- It requires a minimum of 3 disks, but in most cases it will be implemented with a minimum of 4 disks.

- To understand this better, create two groups. For example, if you have a total of 6 disks, create two groups with 3 disks each, as shown in the above diagram, so that Group 1 has 3 disks and Group 2 has 3 disks.

- Within a group, the data is striped, i.e., in Group 1, which contains three disks, the 1st block will be written to the 1st disk, the 2nd block to the 2nd disk, and the 3rd block to the 3rd disk. So, block A is written to Disk 1, block B to Disk 2, and block C to Disk 3.

- Across the groups, the data is mirrored, i.e., Group 1 and Group 2 will look exactly the same: Disk 1 is mirrored to Disk 4, Disk 2 to Disk 5, and Disk 3 to Disk 6.

- This is why it is called a “mirror of stripes”: the disks within a group are striped, but the groups are mirrored.
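The same kind of sketch works for the RAID 01 placement described in the list above. Again this is only an illustration under stated assumptions: `raid01_layout` is a made-up name, disks are numbered from 1, the first half of the disks forms Group 1 and the second half forms Group 2, and the sketch only handles an even number of disks.

```python
def raid01_layout(blocks, num_disks):
    """Map each block to the two disks (one per stripe group) that store it.

    Assumptions for this sketch: disks 1..n/2 form Group 1, disks
    n/2+1..n form Group 2, and blocks are striped round-robin inside
    a group, with Group 2 being an exact mirror of Group 1.
    """
    if num_disks < 4 or num_disks % 2:
        raise ValueError("this sketch expects an even number of disks, at least 4")
    group_size = num_disks // 2
    layout = {}
    for i, block in enumerate(blocks):
        offset = i % group_size                     # stripe within the group
        in_group1 = offset + 1
        layout[block] = (in_group1, in_group1 + group_size)  # mirror across groups
    return layout


for block, disks in raid01_layout("ABCDEF", 6).items():
    print(block, "-> disks", disks)
```

With 6 disks, block A lands on Disks 1 and 4, block B on Disks 2 and 5, and block C on Disks 3 and 6.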

Main difference between RAID 10 vs RAID 01

- Performance on both RAID 10 and RAID 01 will be the same.

- The storage capacity on these will be the same.

- The main difference is the fault tolerance level. On most implementations of RAID controllers, RAID 01 fault tolerance is lower. On RAID 01, since we have only two groups of RAID 0, if two drives (one in each group) fail, the entire RAID 01 will fail. In the above RAID 01 diagram, if Disk 1 and Disk 4 fail, both groups will be down. So, the whole RAID 01 will fail.

- RAID 10 fault tolerance is higher. On RAID 10, since there are many groups (as each individual group is only two disks), even if three disks fail (one in each group), the RAID 10 is still functional. In the above RAID 10 example, even if Disk 1, Disk 3 and Disk 5 fail, the RAID 10 will still be functional.

- So, given a choice between RAID 10 and RAID 01, always choose RAID 10.
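The failure rules in the list above can be written down as two small checks. This is a sketch under the article's model, where a RAID 01 stripe group is considered down as soon as any one of its disks fails; the function names and the 6-disk defaults are assumptions for the example.

```python
def raid10_survives(failed, num_disks=6):
    """RAID 10 stays up unless both disks of some mirrored pair have failed."""
    failed = set(failed)
    pairs = [(d, d + 1) for d in range(1, num_disks + 1, 2)]
    return all(not (a in failed and b in failed) for a, b in pairs)


def raid01_survives(failed, num_disks=6):
    """RAID 01 stays up only while at least one stripe group has no failed disk."""
    failed = set(failed)
    half = num_disks // 2
    group1 = set(range(1, half + 1))
    group2 = set(range(half + 1, num_disks + 1))
    return not (failed & group1) or not (failed & group2)


print(raid01_survives({1, 4}))     # one disk in each group has failed
print(raid10_survives({1, 3, 5}))  # one disk in each mirrored pair has failed
```

Under this model the first call reports the RAID 01 as down and the second reports the RAID 10 as still up, which are the two examples from the list.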

My name is Ramesh Natarajan. I will be posting instruction guides, how-to, troubleshooting tips and tricks on Linux, database, hardware, security and web. My focus is to write articles that will either teach you or help you resolve a problem. Read more about

Comments on this entry are closed.

Something still escapes me… if performance, storage and number of disks (≈ cost) are the same, and RAID10 is better in fault tolerance than RAID01, why should any vendor offer RAID01? Is anyone offering it?

Or is there some area where RAID01 is better than RAID10?

Oh, I almost forgot: YOUR BLOG IS EXCELLENT. Thank you.

Hi Ramesh,

If disk1 and disk2 both fail in RAID10, then what will happen?

I’m sorry, but your logic doesn’t hold here.

You wrote – “In the above RAID 01 diagram, if Disk 1 and Disk 4 fails, both the groups will be down. So, the whole RAID 01 will fail.” Well, that’s also true for the RAID 10 diagram you presented. If disks 1 and 2 fail, your entire RAID is down.

Likewise you wrote – “In the above RAID 10 example, even if Disk 1, Disk 3, Disk 5 fails, the RAID 10 will still be functional.” Again, that also holds true for your RAID 01 diagram. Disks 1, 2 and 3 can all fail in RAID 01 and your system will remain functional.

It’s an issue of probabilities. In both cases, each block exists on two physical drives. The system will fail any time a block becomes unavailable, regardless of grouping. Likewise, the system will remain functional if all blocks are available, regardless of how many drives have failed – assuming your structure can retrieve the unavailable blocks from the other drive on which it resides.

Hi,

thanks a lot

i think raid 5 is the best one…

Hi !

I did the maths with my wife (safety expert) :

– ‘f’ is the failure probability of one single disk.

– Gx is the name of the groups

– Dx is the name of the disks

RAID 10 :

To lose your file, you need to lose G1 OR G2 OR G3. To lose G1, you need to lose D1 AND D2; to lose G2, you need to lose D3 AND D4; and to lose G3, you need to lose D5 AND D6.

=> probability of losing your file : (f*f)+(f*f)+(f*f) = f²+f²+f² = 3f²

RAID 01 :

To lose your file, you need to lose G1 AND G2. To lose G1 you need to lose D1 OR D2 OR D3, and to lose G2 you need to lose D4 OR D5 OR D6.

=> probability of losing your file : (f+f+f)*(f+f+f)=3f*3f=9f²

In this particular case (6 blocks, 6 disks), you are 3 times more likely to lose your file on RAID01 than on RAID10.

More simply, you can think of it like this :

– on RAID 10, if one disk fails, when the second failure appears, there is a 1 in 5 chance that it makes my entire system fail (the other disk in the group)

– on RAID 01, if one disk fails, when the second failure appears, there is a 3 in 5 chance that it makes my entire system fail (any disk in the other group)

The Intel RAID controller in my system (Gigabyte X58A-UD7 motherboard) shows the RAID as RAID 10 and then (RAID0+1) shortly thereafter while the machine is booting up, and then the Intel RST software shows RAID10 while in Windows. I’m honestly not sure which I have at this point. I’ll just assume I have RAID10 and hope for the best. =)

Ken, I agree the logic is flawed.

gUI, you may have done the math but you are following the flawed logic.

In either of the setups if disk 1 fails you lose the redundancy on blocks A and D, so in order to have a complete failure you would have to lose the other disk that contains the A and D blocks.

In the case of RAID 10 it would be losing disk 1 and 2, in the case of RAID 01 it would be losing disks 1 and 4.

Both have the same probability after loss of the first disk. 1 out of 5.

Hi Ramesh,

In Raid10 section, the last bullet says:

This is why it is called “stripe or mirrors”. i.e the disks within the group are mirrored. But, the groups themselves are striped.

But I think you meant to say “stripe of mirrors”.

@ramsee,

Thanks for pointing it out. It is corrected now.

@weebl

No, in 01, the problem is the group. If you lose disk 1, group 1 is dead, and it won’t help you even for getting pieces C, D, E or F. So if the next failure is in group 2, whatever the disk, group 2 is also dead, and you won’t get any file.

The disks are not independent.

@gUI thanks for clarifying. It makes sense now, and re-reading the article I see the author also states the same thing. I agree RAID 10 is better.

Comparing raid10 and raid01 seems easier with the same number of disks (4) for each raid:

– raid10: 2 disks in each group for mirroring, 2 groups for striping (D1G1 D2G1, D1G2 D2G2)

– raid01: 2 disks in each group for striping, 2 groups for mirroring (D1G1 D1G2, D2G1 D2G2).

Then, complete failure if:

– raid 10: if 2 disks of one or more groups failed ((D1G1 and D2G1) and/or (D1G2 and D2G2))

– raid 01: if 2 disks of one or more mirrors failed ((D1G1 and D1G2) and/or (D2G1 and D2G2)).

But the probability of “((D1G1 and D2G1) and/or (D1G2 and D2G2)) failed” is equal to the probability of “((D1G1 and D1G2) and/or (D2G1 and D2G2)) failed”.

And then raid10 == raid01.

@Jidifi

For 2 disks, maybe it’s the same (I don’t want to redo the maths), but for more, it is no longer equivalent. Please re-read all the comments carefully.

@gUI

In your first comment, you write:

“RAID 10 :

To lose your file, you need to lose G1 OR G2 OR G3. To lose G1, you need to lose D1 AND D2 …”.

Question:

“RAID 10 :

If you lose D1 only (or D2 only), have you lost your file ? “

@gUI

In your first comment, you write:

> – ‘f’ is the failure probability of one single disk.

> RAID 10 : => probability of losing your file : (f*f)+(f*f)+(f*f) = f²+f²+f² = 3f²

> RAID 01 : => probability of losing your file : (f+f+f)*(f+f+f)=3f*3f=9f²

With f = 0.8:

RAID 10 : => probability of losing your file : 3 x 0.8 x 0.8 = 1.92 !!

RAID 01 : => probability of losing your file : 9 x 0.8 x 0.8 = 5.76 !!

gUI, probabilities greater than one do not exist in our world.

(Please re-read carefully all your first mathematical books)

I know, thank you very much… but why did you choose f=1 ? I think you also should have a look at your maths books…

Oops, no, you did not choose f=1, you chose 0.8, yes… But the formula is still true. It just means that with a hard drive that has an 80% probability of failing within an hour, you can be sure that you will lose your system. Not very interesting.

Now check with another, more realistic value, like 10^-4 per hour (around one failure per year).
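To try that check, here is a small Python sketch that evaluates the 3f² and 9f² estimates next to exact values. The exact formulas are an assumption of this sketch, not something from the comments above: they take disk failures as independent and use the article's model, where a RAID 01 stripe group is down as soon as one of its three disks fails. All function names are made up for the example.

```python
def raid10_estimate(f):
    """The 3f² estimate for the 6-disk RAID 10."""
    return 3 * f**2


def raid01_estimate(f):
    """The 9f² estimate for the 6-disk RAID 01."""
    return 9 * f**2


def raid10_exact(f):
    """At least one of the three mirrored pairs has lost both disks."""
    return 1 - (1 - f**2) ** 3


def raid01_exact(f):
    """Both three-disk stripe groups have lost at least one disk."""
    return (1 - (1 - f) ** 3) ** 2


for f in (1e-4, 0.8):
    print(f"f={f}: RAID 10 estimate {raid10_estimate(f):.4g}, exact {raid10_exact(f):.4g}")
    print(f"f={f}: RAID 01 estimate {raid01_estimate(f):.4g}, exact {raid01_exact(f):.4g}")
```

For a small f the estimates and the exact values are nearly identical, so the "3 times more likely" conclusion holds there. For f = 0.8 the estimates exceed 1 while the exact values stay below 1, which is the objection raised in the thread.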

Thanks a lot for this post. You described it very simple and easy to understand.

Very good article

Thanks to all for sharing their views

While the above definitions are 100% correct, it is important to recognise that RAID 10 is a relatively new term and there are many RAID 10 implementations out there that call themselves RAID 0+1. As late as 2006/7, manufacturers were documenting RAID 10 implementations as RAID 0+1.

Are you saying that (in raid 01 diagram) if disk 1 and disk 6 both fail, then the files cannot be reconstructed from disks 2, 3, 4, & 5, which together contain the complete set of blocks?

In answer to Karl: yes. In RAID 01, if disk 1 fails then stripe Group 1 has failed, so the only data access available is via the Group 2 stripe. If any disk in Group 2 fails, then both groups have failed.

This is why RAID 10 is far superior to RAID 01 in terms of availability.

For all the people that can’t get why 01 is worse here is a more detailed description:

The gist is basically that when a Drive fails in RAID 01 the whole Group fails. In the case above imagine failure of Disk1 then the whole Group1 is down and you’re left with only three disks that make up Group2. If ANY of them fails then I hope you have recent backups 🙂

As for RAID 10 – it needs to lose two mirrored disks (for instance Disk1 and Disk2) in order to lose a whole group (in this case Group1) before the RAID can fail. And the probability is clearly smaller even without the math: with 01 you have a simple mirror, so when any of the drives fails you have only one group left, and if any of that group’s drives fails while you’re replacing or rebuilding the RAID – it’s all gone.

A decent raid controller should be able to pick up that, if disk 1 and 5 had failed, it still had access to all data. It would basically piece the 2 raid 0’s together to make a working set of data.

The problem is that some of the low end raid controllers don’t have this level of intelligence.

Also, if you were to resort the drive order in the picture to show “Disk 1 and Disk 4, then Disk 2 and Disk 5, and then Disk 3 and Disk 6” you would still have the same raid 0, and you could see that data-wise, raid 10 and raid 01 should be the same; it’s just a question of how smart is the raid controller (to understand that all I need are blocks A to F, and it doesn’t matter which drive in which raid set they are on).
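The point about blocks rather than groups can be made concrete with a short Python sketch. It assumes an idealised controller that can read any surviving copy of a block, which is exactly the "smart" behaviour described above and not something every implementation offers; the tables `RAID10_COPIES` and `RAID01_COPIES` simply transcribe the article's 6-disk diagrams, and the function names are made up.

```python
from itertools import combinations

# Which disks hold a copy of each block in the article's 6-disk diagrams.
RAID10_COPIES = {"A": {1, 2}, "B": {3, 4}, "C": {5, 6},
                 "D": {1, 2}, "E": {3, 4}, "F": {5, 6}}
RAID01_COPIES = {"A": {1, 4}, "B": {2, 5}, "C": {3, 6},
                 "D": {1, 4}, "E": {2, 5}, "F": {3, 6}}


def data_intact(copies, failed):
    """True if every block still has at least one copy on a working disk."""
    failed = set(failed)
    return all(disks - failed for disks in copies.values())


def fatal_pairs(copies):
    """All two-disk failures that leave some block without any copy."""
    return [pair for pair in combinations(range(1, 7), 2)
            if not data_intact(copies, pair)]


print("RAID 10:", fatal_pairs(RAID10_COPIES))
print("RAID 01:", fatal_pairs(RAID01_COPIES))
print("RAID 01 with disks 1 and 5 failed:", data_intact(RAID01_COPIES, {1, 5}))
```

At the block level each layout has exactly three fatal pairs out of fifteen, so the difference in fault tolerance comes from how the controller treats a degraded stripe group, not from where the data physically sits.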

Dev Mgr, you miss the point of this discussion: if the RAID controller implements RAID 0+1 then it cannot handle that failure mode; if the RAID controller implements RAID 10 then it can. It has nothing to do with the level of intelligence of the controller or whether it is a low or high end controller, just the RAID algorithm chosen. The selection of the latter has a significant amount to do with the level of intelligence of the firmware developer, but that said, there can be good reasons to choose RAID 0+1 over RAID 10 !!!

I want to understand more the availability of the data. As Dev Mgr points out, depending on the failure mode/sequence of events, your file may actually still exist, but limitations in the RAID algorithm prevent you from accessing it.

Does this mean that with some manual intervention, e.g. physically juggling the drives and putting them in a certain sequence, one could restore their file for use once more? Once restored, further manual steps could be taken to restore your full striped mirror (or mirrored stripe) and your RAID array of choice would once again be live?

I found this link looking for the benefit of RAID 10 vs. RAID 01, but using FOUR drives in total. With all things being equal, in a four-drive (2 pairs) array, RAID 01 & 10 should be equal. I’m running an older 3ware controller, and I’ve learned never to trust luck.

It seems to me that the redundancy depends on the controller you use.

If I do RAID 01, and one drive from each stripe fails, even if they weren’t holding the same data, I’m depending on the controller being smart enough to “marry” up one drive from each stripe to form a whole effective non-RAID stripe pair. In essence, to re-build one striped pair dynamically. That isn’t a bet I’m anxious to make!

With the fundamental element being a mirror, the controller can be a good deal stupider and the low-level mirrors still dutifully cough-up their contribution to the over-arching stripe, and my day isn’t ruined.

Since both 01 & 10 use BOTH striping and mirroring, they are likely to provide the same performance. So I’m going with RAID 10. (now if there were only some way to make it go to ELEVEN! 🙂

Grant, what you say may be possible; however, in a RAID 0+1 scenario you really only want to consider this if you had multiple simultaneous failures. The longer the period between the failures, the more out of step the remaining good drives in the failed stripe get with the drives in the live stripe.

Whether manual intervention as you describe will work really depends on the RAID software (firmware), what controls it allows and what metadata it stores. Do not assume the RAID software will allow such activity.

Hugh, I’m afraid to say raid 0+1 and raid 10 are not the same and will NOT give the same availability.

In raid 0+1, when the first disk is lost, the first stripe is lost, which means both disks in that stripe are ejected from membership of the RAID volume and so the data is no longer mirrored at all. At this point the data on the other member(s) of the stripe starts to become increasingly stale as it is no longer being updated. If either of the disks in the remaining stripe is lost, the complete volume is lost… the controller can’t have the smarts to sort the issue out, we have lost data …

In Raid 10, when the first disk is lost, only one half of one mirror is lost and the stripe across the mirrors is unaffected. So provided your next disk failure comes from a disk in the remaining good mirror, the stripe will still be good.

So if Raid0+1 has two disk failures, the volume is toast …

Raid 10 can tolerate at least 1 disk failure and potentially 2 if they are the “right disks”, so raid 10 has better availability.

Hi Laurence,

I’m stuck searching for a backup solution, wondering if you or any of the readers can help.

I have an industrial slim PC at a remote site, no internet connection is permissible (corporate regulation), and I’m nervous of hdd failure. If I could completely mirror the drive, even once a week, the local techs could simply swap the drives to get the system running again and then contact me for warranty support – this keeps downtime very low. The PC box does not have a RAID controller, and does not have room for a 2nd hdd in the box.

Does there exist an external mirror device that works in parallel to the primary disc, or perhaps an external 2 disc RAID 1 device that I can use instead of the internal disc (I’m picturing a 30cm IDE extension cord, so the PC thinks the RAID is internal)?

I prefer a hardware sol’n over software, simply because I cannot login and confirm the software is working at all times.

Thx

Please correct me if my logic is wrong, but it seems the math was made much more complicated that it needs to be. Here is my explanation:

Based on the diagrams for Raid 10 with 6 disks –

In Raid 10 if 2 disks fail the probability of complete failure is 1/5.

The 1st disk has a probability of 1, since it does not matter which disk fails first, and the 2nd disk must be part of the same group as the first disk to fail. Therefore, out of the remaining 5, there is only 1 disk that can cause complete failure. The probability is 1 out of 5.

In Raid 01 if 2 disks fail the probability of complete failure is 3/5.

The 1st disk can be anything once again, and to cause complete failure the 2nd disk can be any of the 3 disks in the other group. Therefore, out of the remaining 5 disks, the probability is 3 out of 5.

Based on this for a 2 disk failing scenario Raid 10 is better. Writing out the probability for 3 disk failure would prove the same result.

The same applies for 4 disks: for Raid 10 the probability that 2 failed disks cause complete failure is 1/3, and for Raid 01 it is 2/3.
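The counting argument above generalises to any even number of disks, and a short Python sketch makes the pattern visible. It assumes the same model as the comment (RAID 10 built from two-disk mirrors, RAID 01 built from two equal stripe groups where one failed disk takes its whole group down); the function name `second_failure_fatal` is made up for the example.

```python
from fractions import Fraction


def second_failure_fatal(level, num_disks):
    """Chance that a second, equally likely disk failure takes the array down."""
    if num_disks < 4 or num_disks % 2:
        raise ValueError("this sketch expects an even number of disks, at least 4")
    remaining = num_disks - 1
    if level == "10":
        fatal = 1                 # only the mirror partner of the failed disk
    elif level == "01":
        fatal = num_disks // 2    # any disk in the other stripe group
    else:
        raise ValueError("level must be '10' or '01'")
    return Fraction(fatal, remaining)


for n in (4, 6, 8):
    print(n, "disks: RAID 10", second_failure_fatal("10", n),
          "| RAID 01", second_failure_fatal("01", n))
```

For 4 and 6 disks this gives the 1/3 versus 2/3 and 1/5 versus 3/5 figures from the comment, and the gap widens as disks are added.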

Hi Leon, your maths fails to take into account that in raid 0+1, after the first failure, half the disks are no longer available (in the above example two good disks will have effectively been failed along with the bad disk).

In raid 0+1 the first disk failure fails all disks in that stripe; the failure of any subsequent disk means a member of the other stripe has failed and so data access is lost. Two disk failures = total loss of data access.

In raid 1+0 there is potential for the loss of up to half the members while data access remains.

As stated earlier, this is why RAID 1+0 is so superior to RAID 0+1.

Guys it’s a Simple logic don’t make it complex.

RAID 1+0 has a mirrored pair as its basic element. If a drive fails and is replaced, only the mirror needs to be rebuilt. In other words, the disk array controller uses the surviving drive in the mirrored pair for data recovery and continuous operation. Data from one surviving disk will be copied to the replacement disk.

Note: If there is a hot spare, data is rebuilt onto the hot spare from the surviving drive in the mirrored pair . When the failed disk is replaced, data from the surviving drive in the mirrored pair is used to rebuild the data on the replaced disk.

RAID 0+1 uses a stripe as its basic element. The stripe has no protection (RAID 0). If a single drive fails, the entire stripe is faulted, meaning that only half the disks in the RAID set are available for data access. A rebuild operation rebuilds the entire stripe, copying data from each disk in the healthy stripe to the equivalent disk in the failed stripe. This causes increased, and unneeded, I/O load on the backend, and also makes the RAID set more vulnerable to a second disk failure.

RAID 0+1 is less common than RAID 1+0, and is a poorer solution.

Hope above helps

Also, after disk failure in 0+1 you have to rebuild fully half of the disks in the array, which means you have to do a lot of reads on the other, non-failed half. You really end up putting the system through a large amount of disk I/O, and risking failures in other disks.

In 1+0 you’re only involving the one other disk in the affected mirror.
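The rebuild difference described in the comments above comes down to simple counting, sketched here in Python. The function `rebuild_cost` is hypothetical and only counts whole disks read and rewritten after a single disk failure, assuming RAID 01 re-copies the entire failed stripe group; real controllers may behave differently.

```python
def rebuild_cost(level, num_disks):
    """Return (disks_read, disks_rewritten) for a rebuild after one disk failure."""
    if num_disks < 4 or num_disks % 2:
        raise ValueError("this sketch expects an even number of disks, at least 4")
    if level == "10":
        return (1, 1)          # copy the surviving mirror partner to the new disk
    if level == "01":
        half = num_disks // 2
        return (half, half)    # copy the whole healthy stripe to the failed one
    raise ValueError("level must be '10' or '01'")


print("RAID 10, 6 disks:", rebuild_cost("10", 6))
print("RAID 01, 6 disks:", rebuild_cost("01", 6))
```

In the 6-disk example a RAID 01 rebuild touches three times as many disks as a RAID 10 rebuild, which is where the extra I/O load comes from.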

In case this has not been settled yet, here is every possibility for a 2 drive failure:

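| Failed drives | RAID 0+1 | RAID 1+0 |
|---|---|---|
| 1,2 | Up | Down |
| 1,3 | Up | Up |
| 1,4 | Down | Up |
| 1,5 | Down | Up |
| 1,6 | Down | Up |
| 2,3 | Up | Up |
| 2,4 | Down | Up |
| 2,5 | Down | Up |
| 2,6 | Down | Up |
| 3,4 | Down | Down |
| 3,5 | Down | Up |
| 3,6 | Down | Up |
| 4,5 | Up | Up |
| 4,6 | Up | Up |
| 5,6 | Up | Down |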
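The listing above can be regenerated by brute force. The Python sketch below assumes the article's 6-disk diagrams (RAID 0+1 groups of disks 1 to 3 and 4 to 6, RAID 1+0 pairs 1-2, 3-4 and 5-6) and the rule that a RAID 0+1 stripe group is down as soon as one of its disks fails; the function names are made up for the example.

```python
from itertools import combinations


def raid01_up(failed):
    """Up while at least one three-disk stripe group has no failed disk."""
    group1, group2 = {1, 2, 3}, {4, 5, 6}
    return not (failed & group1) or not (failed & group2)


def raid10_up(failed):
    """Up unless both disks of some mirrored pair have failed."""
    return all(not pair <= failed for pair in ({1, 2}, {3, 4}, {5, 6}))


rows = [(drives, raid01_up(set(drives)), raid10_up(set(drives)))
        for drives in combinations(range(1, 7), 2)]

for drives, up01, up10 in rows:
    print(drives, "Up" if up01 else "Down", "Up" if up10 else "Down")

print("RAID 0+1 down in", sum(not up01 for _, up01, _ in rows), "of", len(rows), "cases")
print("RAID 1+0 down in", sum(not up10 for _, _, up10 in rows), "of", len(rows), "cases")
```

Counting the rows gives 9 fatal combinations out of 15 for RAID 0+1 and 3 out of 15 for RAID 1+0, the same 3/5 and 1/5 ratios worked out earlier in the thread.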

Raid 10 tolerance is not better, and here’s why. Let’s discuss the failure scenarios the article mentions:

Scenario #1: Disks 1 and 4 both fail simultaneously. The entire RAID 01 array would fail, but the RAID 10 array would survive.

My response: True, but if disk 1 and disk 2 both fail, RAID 10 fails, while RAID 01 survives. Since the chances of disks 1 and 2 both failing simultaneously are exactly the same as disks 1 and 4 doing the same, it’s a wash; RAID 10 provides no advantage.

Scenario #2: Disks, 1, 3, and 5 all fail simultaneously. According to the article, the RAID 01 array would fail, while the RAID 10 array would survive.

My response: NOT TRUE. Data on the RAID 01 array would NOT be lost. Why? Because the data on disks 2 and 5 are identical. So just take the (still working) disk 2, substitute it for disk 5 in the “group 2” array, and voila, the array is working again, with all data still intact.

So in conclusion, it doesn’t matter too much whether you do RAID 10 or RAID 01; the fault tolerance is the same either way.

@jake12 : in conclusion, you’re wrong 😀

RAID does not work that way, and that’s precisely why 01 has lower availability. As soon as you lose one disk (say #1), you lose the whole group (Group1), even if the other disks are still OK. That’s the implementation, you cannot change this.

So once this happens, if the next failure is in the other group, you’re dead (even if all the data is still available globally, I agree).

@gUI

I don’t think that’s true.

If one drive in a Raid 0 array dies you lose the “entire array” because it’s inaccessible, but the data on the surviving drives doesn’t go anywhere. It’s not like the RAID controller says “Oh crap, a drive died; I’d better format all the other drives in the array just in case”

When a drive dies, the RAID 0 *ARRAY* dies, but the *DRIVES* themselves are still perfectly good, and all the data is still there. The only reason the array “dies” is because it needs all drives present in order to access the data, and if one drive is dead/missing, it can’t access the data on the other drives. But if you could somehow magically replace the failed drive with an identical drive with identical data (as is the case when the array is mirrored across another, identical RAID 0 array on the other side of the RAID 01), then the controller has no way of knowing that you just switched drives, and the array lives to see another day.

In that way, you are totally right. But you’ll have to switch off your system, modify the array structure, and switch it back on. We can’t then talk about availability of the system, and that’s why 10 is more reliable, meaning it guarantees a better availability of the service.

In case #2, your data are still there (you can retrieve them at the cost of a little effort), but the service is down.

This being said, I’m not sure that you can switch the drives between them and simply restart your RAID, I wonder if they are tagged or something. But even if this is the case, there will always be a way to retrieve your data, yes.

@gUI

So then I think we’re in agreement – Raid 10 provides no extra redundancy against catastrophic data loss, only against system uptime regarding how long it takes to swap drive slots and reboot the system.

But even then, that’s assuming that the RAID controller/driver/firmware can’t figure out that there are still enough “good” drives left in the array to get a 100% intact RAID array going. I’ll admit I don’t know a whole lot about server-level RAID controllers, but wouldn’t it be logical for the RAID card manufacturer to put this incredibly simple bit of programming into whatever function monitors the drive status? They’ve been adding all kinds of upgrades/improvements to RAID cards over the years (caching, battery backup, etc.); it wouldn’t be very much trouble to make it so that a RAID 01 array had the same redundancy as a RAID 10, so that RAID noobs didn’t have to worry about how to set the array up.

Hi Jake12, I have to disagree, because the drive failures are not likely to be simultaneous. In a RAID 0+1 model, after the first drive failure the other two drives that are “marked” bad are no longer being updated, which means that very quickly you will not be able to reconstitute any file that is written to, and there will be real data-consistency issues.

Secondly, the idea of reconstituting the data from the surviving drives may not work, as some RAID implementations will not allow such an action because they track the membership using metadata stored either on the drive or in the controller.

Regards, Laurence

@Laurence

Not a bad point. Theoretically, a RAID controller could alleviate this problem by continuing to update the other drives in the “failed” RAID 0 array, but the particular RAID controller device may or may not do this in practice.

So in theory, there should be no difference in redundancy between RAID 01 and RAID 10, but in practice, you should stick with RAID 10 just to play it safe.

> @gUI

>

> RAID 10 :

> To lose your file, you need to lose G1 OR G2 OR G3. To lose G1, you need to lose D1 AND D2; to lose G2, you need to lose D3 AND D4; and to lose G3, you need to lose D5 AND D6.

> => probability of losing your file : (f*f)+(f*f)+(f*f) = f²+f²+f² = 3f²

> RAID 01 :

> To lose your file, you need to lose G1 AND G2. To lose G1 you need to lose D1 OR D2 OR D3, and to lose G2 you need to lose D4 OR D5 OR D6.

> => probability of losing your file : (f+f+f)*(f+f+f)=3f*3f=9f²

You can’t add up probabilities like that because their events overlap. Also probabilities will never ever be higher than one if you do proper calculations.

Considering the first case RAID 10:

You are asking for the probability P(G1=d or G2=d or G3=d), where d means the group is dead and l means it is alive. To calculate this you could break it down into each _disjoint_ case:

(G1=d and G2=l and G3=l) or (G1=d and G2=d and G3=l) or (G1=d and G2=l and G3=d) or (G1=l and G2=d and G3=l) …

Here you could add each probability because their events are disjoint. But it is simpler to calculate P(G1=l and G2=l and G3=l), which is the only event left out, the opposite case.

Therefore the probability of P(G1=d or G2=d or G3=d) = 1 – P(G1=l and G2=l and G3=l) = 1-(1-f)^3.

Another approach would be to negate the boolean expression “G1=d or G2=d or G3=d”, which actually is “G1=l and G2=l and G3=l”.

If you now let f be 0.8 the result would be 1 – 0.2^3 = 99.2 %, which of course is within the range [0, 1].

Wait, i’m stupid. I messed up the probability for a group to be alive:

P(G=l) = 1-f^2

Therefore P(G1=l and G2=l and G3=l) is (1-f^2)^3. The hopefully correct formula is P(G1=d or G2=d or G3=d) = 1-(1-f^2)^3. Let f=.8 then the result is ~ 95.3 %.

Sorry for the confusion :/
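The corrected formula 1-(1-f^2)^3 can be sanity-checked by brute force. The Python sketch below walks through all 2^6 combinations of failed and working disks for the 6-disk RAID 10 diagram, assuming independent disks that each fail with probability f, and adds up the probability of every combination in which some mirrored pair is completely dead. The function name is made up for the example.

```python
from itertools import product


def raid10_loss_bruteforce(f, pairs=((1, 2), (3, 4), (5, 6))):
    """Sum the probability of every disk-state combination that kills a pair."""
    disks = sorted({d for pair in pairs for d in pair})
    total = 0.0
    for states in product((True, False), repeat=len(disks)):  # True = failed
        failed = {d for d, is_failed in zip(disks, states) if is_failed}
        if any(a in failed and b in failed for a, b in pairs):
            prob = 1.0
            for is_failed in states:
                prob *= f if is_failed else (1 - f)
            total += prob
    return total


f = 0.8
closed_form = 1 - (1 - f**2) ** 3
print("brute force:", raid10_loss_bruteforce(f))
print("closed form:", closed_form)
```

Both values come out at about 95.3 % for f = 0.8, in agreement with the corrected formula.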

To simplify the point good_weather is making, consider rolling six dice (regular, 6-sided cubic dice). What are the chances of rolling a “5” on at least one of them?

Let’s see, the chances of getting a 5 (or any other number) on a single die is 1 in 6, because there are 6 possible numbers. So the chances of getting a “5” on at least one of the six dice rolled is:

(1/6) + (1/6) + (1/6) + (1/6) + (1/6) + (1/6) = 6 * (1/6) = 1

So by that logic, you have a 100% chance of rolling at least one “5”, which is obviously wrong.

While it’s true that *on average* you’ll get a 5 on one of the six dice, you’re not guaranteed to have exactly one on every roll, because some rolls will produce more than one “5”, while others will produce none.

The easiest way to correctly calculate the odds, as good_weather points out, is to calculate the odds of getting *NO* 5’s; i.e. the odds of all six dice resulting on something *other* than a 5, which is easy. The odds of getting a non-5 roll on a single die is 5/6, so the odds of getting a non-5 roll on all 6 dice is (5/6)^6, or 15,625/46,656, or about 1 in 3.

So you have a 1 in 3 chance of NOT getting a 5, which means you have a 2 in 3 chance of rolling at least one. p(at least one) = 1 – p(none).

I think that simplifies it. Either that, or makes it more confusing ;P
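For anyone who wants to check the dice arithmetic, here is a tiny Python sketch using exact fractions. The helper name `at_least_one` is made up; it just applies p(at least one) = 1 – p(none) for independent trials.

```python
from fractions import Fraction


def at_least_one(p, trials):
    """Probability that an event of probability p happens at least once."""
    return 1 - (1 - p) ** trials


none = Fraction(5, 6) ** 6                 # no 5 on any of the six dice
chance = at_least_one(Fraction(1, 6), 6)   # at least one 5

print("p(none)         =", none)
print("p(at least one) =", chance, "or about", round(float(chance), 3))
```

The result is 31,031/46,656, roughly the 2 in 3 chance mentioned above, and the same helper applies to disk failures when they are independent.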

Very Good Info Ramesh

Raid 10 is the same performance as Raid 1?

No.

gui@ – almost correct, but you made mistakes in calculations.

good_weather@ – correct, but still there are significant assumptions in your calculations.

The distinction between 10 and 01 matters only if you are using them in different layers. For example, doing RAID 0 in software on top of hardware RAID 1, or the reverse. Or doing everything in software, but using two SATA/SAS controllers (each one for half of your drives). Then, losing one of the drives in 01 may mean the whole group is unavailable (because the hardware RAID will not know about the other group, or because the whole group failed due to a faulty controller or a faulty shared PSU), and then it is just a matter of losing any of the remaining disks in the remaining group to lose the whole array. However, if all disks are behind one controller, and you are doing software RAID or smart hardware RAID, then there is no difference: each block is available on two devices, and shuffling the order of drives doesn’t matter.

Your analysis of failure probabilities for 10 and 01 assumes there is a dependency between disks in a single group, but that is only true in some cases (as explained above). It is still valid in many situations, for example when using 2 controllers or doing replication across machines. Think about it. Is it better to have the first mirror on machine 1 and the second mirror on machine 2, and perform striping on the client (raid 01)? Not really: if one of the machines dies, you lose access to the data, because having at least one drive in each machine is necessary. Contrast that with the opposite. Stripe non-mirrored drives on each machine, and mirror them (in the client) across machines (raid 10)? Yes, even losing a whole single machine will leave the array available to the client. Losing some disks will also be fine in such a scenario, because you can choose which machine to use for each block.

real world working with 5x9s the array RAID theory is all change – classic RAID level is going … going nearly gone, chunklets as in HP 3PAR’s array are the way ahead, and in regard to the posting classic RAID 10 vs 01 – I would always put in two arrays and have layered either

A) array based replication

or

B) HBM (software raid) over the two arrays.

(separate fabrics of course). Within my SAN (2PB 14 arrays stretched over 2 sites), we have had a (multi disk failure) just (once) in three years (that was 2 disk). and the Hot spares kick in very fast so the exposure time is very low, and we run RAID 10.

As for asking the array to choose which RAID 10 or 01 – for the question I would go with suppliers best practice.

Claire

As all these attempts to clarify seem to have not worked, here is another try.

RAID 10 -> Each disk in a 3 disk striped array has its own mirror. If you lose D1, you must lose D4 to have failure. Losing D5 or D6 would be ok. You are guaranteed to have D1 = D4, D2 = D5, and D3 = D6.

RAID 01 -> A complete 3 disk striped array is mirrored to another 3 disk striped array. In this case, you are NOT guaranteed D1=D4, D2=D5 and D3=D6. If that were true, then you actually have RAID 10. In RAID 01 the stripe sets are independent of each other.

For example, files A, B, and C could be split into 3 stripes each – A1, A2, A3, B1,…. Now in the above illustrated setup for RAID 01, you can have the following:

D1 – A1, B1, C1 | D4 – A1, B2, C3

D2 – A2, B2, C2 | D5 – A2, B3, C1

D3 – A3, B3, C3 | D6 – A3, B1, C2

Each stripe set is free to stripe data any way it wants because the parts of the set are not individually mirrored (as they are in RAID 10; again, if you know the sets are identical, it is RAID 10). Here, if you lose D1, then losing any of D4, D5, or D6 will cause failure. In other words, after losing 1 disk, RAID 01 is 3 times more likely than RAID 10 to fail on the next disk loss.

If you know the stripe sets are identical, it is a RAID 10. When you say RAID 01, you are implying that the stripe set pieces MAY NOT necessarily be identical.
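
As a rough sketch of the point above (not any real controller's logic), the layouts from the D1 to D6 example can be written down as sets of chunks per disk, and every two-disk failure that includes D1 checked for lost chunks. The names `mixed_layout`, `identical_layout`, `data_survives` and `fatal_partners` are made up for illustration, and the model assumes the array is lost as soon as any chunk has no surviving copy.

```python
# Chunks held by each disk, taken from the D1..D6 example above.
mixed_layout = {
    "D1": {"A1", "B1", "C1"}, "D4": {"A1", "B2", "C3"},
    "D2": {"A2", "B2", "C2"}, "D5": {"A2", "B3", "C1"},
    "D3": {"A3", "B3", "C3"}, "D6": {"A3", "B1", "C2"},
}

# Hypothetical counterpart where D4, D5, D6 are exact copies of D1, D2, D3.
identical_layout = {
    "D1": {"A1", "B1", "C1"}, "D4": {"A1", "B1", "C1"},
    "D2": {"A2", "B2", "C2"}, "D5": {"A2", "B2", "C2"},
    "D3": {"A3", "B3", "C3"}, "D6": {"A3", "B3", "C3"},
}


def data_survives(layout, failed):
    """True if every chunk still exists on at least one disk that has not failed."""
    all_chunks = set().union(*layout.values())
    surviving = set().union(*(c for d, c in layout.items() if d not in failed))
    return surviving == all_chunks


def fatal_partners(layout, first):
    """Disks whose failure, on top of `first`, leaves some chunk with no copy."""
    return sorted(d for d in layout
                  if d != first and not data_survives(layout, {first, d}))


print(fatal_partners(mixed_layout, "D1"))      # ['D4', 'D5', 'D6']
print(fatal_partners(identical_layout, "D1"))  # ['D4']
```

With the independently striped sets, any of three disks finishes the array off after D1 is gone; with identical sets, only D4 does.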

When would anyone use RAID 01? Hopefully never, but suppose you have 6 disks and 2 four port RAID controller cards. You could set up RAID 10 with 3 RAID 1 sets (2 on one controller and 1 on the other) and then a software RAID 0 stripe set. Or you could set up RAID 01 with a 3 disk RAID 0 stripe set on each controller, and a software RAID 1. In this case there can be a slight performance advantage to RAID 01 because software RAID 1 uses fewer processor cycles than software RAID 0.

But just get a 6 port controller card and do everything (RAID 1+0) in hardware. Restoring anything after any kind of failure in a hardware + software RAID setup is a nightmare, and you have more points of failure.

Jason

@Jason

Brilliant !!! Thanks a lot 🙂

No Jason, you’re totally wrong. What you’re describing is just simple clustering of random disks, same size or not. That’s not an actual raid 0. If you want to cluster same-sized disks and not actually stripe the data across them like it should be, go for it.

Witek is the only one correct so far. All of this should be assumed to be hardware array setups, otherwise how is this a comparison. I’d even go as far as to say the 0+1 obviously using hardware raid on same controller would be faster. Striping across several disks usually gives better performance. Your scenario is pretty dumb in the first place trying to compare 2 similar raid setups, but using odd/even number of groups. If you had 6 disks why are you doing 3 groups of Raid 1. I highly doubt striping that many Raid 1 groups would even benefit as much. please provide benchmarks to your completely illogical raid grouping.

But if only one of the disks becomes defective, which has the better fault tolerance, 1+0 or 0+1?

In this situation, is it the same in 1+0 as in 0+1: does the array keep working, or does it stop?

Thanks

I would like to thank all the participants in this discussion for all the crucial information they have provided. With that said I agree the RAID 10 has a lower probability of failure of data recovery, that is the key, you don’t wait for another failure. The RAID controller is designed to rebuild the data after replacing the failed drive/s, right? Provided you have not had several critical drive failures at the same time, in the same group and at least one of each data set still exist the array is rebuilt upon drive replacement, am I incorrect? Lets say drive #1, #4 and #5 all fail at one time in the 6 disk RAID 10 example above, there is still a full data set, you replace the drives, rebuild the array and you have lost no data. Is this correct?

I’m an HD Digital Video Editor who SERIOUSLY needs a good recommendation for an ext. hard drive [RAID]. I have a PC system. Long story short, I want a RAID 10 system if they have one [ext. HD]. Is there such a thing?

[I haven’t been able to locate one] And if so, which is recommended?

If they don’t have one what is the next best thing??

HD files are HUGE & my current [lame] ext. hard drives perform extremely slow due to the video graphics and effects. :/

A quick, simple, legit solution would be IMMENSELY appreciated!

Thanks in advance guys 🙂

Sorry guys, but your whole discussion is amusing. Please check the difference between 1E, 0+1, 1+0, 5, 6 and mdadm’s true 10 raid-levels with its different near, far, offset options (which to me seem relevant to more and more obsolete rotating rust).

Also check out btrfs and zfs and possibly win2012 server and later storage pools.

Basically, in >2010 we know that block-level redundancy is 2000s, now we have multi-device filesystems per machine in different stages of maturity. Including SSD caching in hardware or via dedicated device… Let alone concepts like replicating all data n times on your different local machines…

To GeekThe Sneek, yes they do have external RAID 10 USB plug and play HD sets. Check TigerDirect I know they have them. Good Luck and happy Computing to all.

RAID 10

—1— —2—

A* A* B B

C* C* D D

RAID 01

—1— —2—

A* B A* B

C* D C* D

* Represents Drive Failures

I think it depends on where the drive fails. In RAID 10, if both the drives in the same array fail, then it’s a complete failure of the RAID.

In RAID 01, if 2 drives fail, one in each array, it’s a complete failure of the RAID.

@ryan

But therein lies the rub. You’re more likely to get a complete array failure in Raid 01 than in RAID 10. I’m not sure if your illustrated example uses 4 drives or 8 drives, but here’s an example using 4 drives for simplicity.

RAID 10

—1— —2—

—(RAID 0)—

***A*** ***B***

__^__ __^__

mirror mirror

—v— —v—

***C*** ***D***

Here, A and C are mirrored (RAID 1) on side 1 (left) of the array, and likewise for drives B and D on the right. Arrays #1 and #2 are then striped in a RAID 0 setup.

In this case, if one of the drives fails (say, drive A), then the entire array could be lost if, and only if, drive C is the next drive to fail. Failure of drive B or D does not disable the array, since they are interchangeable with one another thanks to being “mirrors” of one another. Thus, since only 1 drive (out of the 3 remaining) can disable the array, odds are 1 in 3 that the next drive failure will ruin the IT department’s day.

Now consider RAID 01. Same setup as above, but with mirroring and striping reversed. So drives A and C are striped in a RAID 0 setup (array #1), while drives B and D are the same (array #2), and the two RAID 0 arrays are mirrored in a RAID 1 array across sides 1 and 2.

Once again, consider that one drive fails (again, assume it’s drive A; it doesn’t matter which drive, because the concept will still apply). Since drive A is gone, that means array AC is gone also, since they were a RAID 0 array, and a RAID 0 array dies when just one of its constituent drives fails. So the system now relies on just two drives (B and D), which are also set up in a RAID 0 configuration. I think you’re starting to see the problem; if either drive B or D is the next drive to fail, then the entire hard disk array fails, so your odds are 100% that the next drive failure will disable the entire disk array (or 2 in 3 if you count the disabled-but-still-functional drive C).

Either way, your odds of an array failure are lower with RAID 10 than RAID 01, with a typical RAID controller.

Hypothetically a smart, thorough, well-designed RAID controller could continue to write data to drive C after drive A failed (instead of disabling array AC as soon as one of its drives failed), effectively using it as a mirror of drive D in case D fails. In this case, overall fault tolerance is the same as RAID 10, since you have the same 1 in 3 chance of a total array failure (since drive B would be the only drive out of the 3 that could cause it). However, in practice, few (if any) RAID controllers are smart enough to do this, so using RAID 10 over RAID 01 is pretty much always the better choice, since it gives you the same speed/performance anyway, and usually greater fault tolerance.
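
The three cases in this comment can be sketched in a few lines. This is only a toy model of the 4-drive example: the function names are invented here, the "typical" controller is assumed to drop a whole RAID 0 set as soon as one member fails, and the "smart" controller is assumed to keep using every healthy drive (A paired with B, C paired with D).

```python
from fractions import Fraction

DRIVES = ["A", "B", "C", "D"]


def raid10_alive(failed):
    """Stripe of mirrors: A/C and B/D are mirror pairs; each pair needs one survivor."""
    mirrors = [{"A", "C"}, {"B", "D"}]
    return all(not pair <= failed for pair in mirrors)


def raid01_typical_alive(failed):
    """Mirror of stripes, typical controller: one stripe set must be fully intact."""
    stripes = [{"A", "C"}, {"B", "D"}]
    return any(not (s & failed) for s in stripes)


def raid01_smart_alive(failed):
    """Mirror of stripes, hypothetical smart controller: A mirrors B, C mirrors D."""
    copies = [{"A", "B"}, {"C", "D"}]
    return all(not pair <= failed for pair in copies)


def odds_next_failure_fatal(alive, first="A"):
    """Fraction of the remaining drives whose failure would take the array down."""
    rest = [d for d in DRIVES if d != first]
    fatal = [d for d in rest if not alive({first, d})]
    return Fraction(len(fatal), len(rest))


print(odds_next_failure_fatal(raid10_alive))          # 1/3
print(odds_next_failure_fatal(raid01_typical_alive))  # 2/3
print(odds_next_failure_fatal(raid01_smart_alive))    # 1/3
```

The 2/3 figure counts drive C as one of the three remaining drives, as in the parenthetical above.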

Hope that made sense, and forgive my lousy ASCII art skills =)

-jake

This whole argument only takes into account the redundancy of DISKS.

What if a controller fails?

YOU SHOULD: stripe off of a single controller, then mirror that stripe to an identical stripe on a separate controller. That’s a “mirror of stripes”.

This way if a disk fails, a stripe is down, the other one is still up as usual.

But what if a controller fails? In a mirror of stripes, you’re still up. In a stripe of mirrors, you’ve lost one piece of each stripe and you’re down. Pray to never have a controller failure for 10.

Conclusion: 01 is better in all cases.

So you say you’ve got 1 RAID controller for everything? That’s even worse.

Best is a mirror of stripes created such as you would do within Solstice Disksuite, using separate controllers for each stripe to hang off of.

Also the examples you give are unnecessarily confusing, and you introduce the concept of a “group”. There are mirrors and stripes, nothing more.

You don’t need the letters “A-F” for any purpose I could see.

I basically agree with a comment from Ven bask on Sep 24 2013.

Think of striped data as one storage unit/group composed of 2 or more disks for performance and/or extra storage. (The 0 in Raid 10/01)

Think of a mirror as having a mirror image (hot backup) to immediately use. (The 1 in Raid 10/01) Failed mirror must be resynced before fully mirrored again. Assume only one mirror… overhead of multiple mirrors and extra redundant storage is probably prohibitive.

Raid 01: Each storage unit/group is mirrored – identical striped data on a second storage unit/group. Individual disks are not considered mirrored but there should be a disk with identical data in the other unit/group. Any one disk fails in a storage unit/group and that one is down. The remaining data on the remaining disks in the unit/group becomes stale and not suitable for normal recovery. (Unlikely RAID/system can continue to partially mirror the stripe). Drives in the failed storage unit/group are no longer active. A failure of one still >activeactiveanother< mirrored pair does not bring system down. However, additional failure in already failed mirrored pair of disk before failure has been recovered (resync/resilver the 1 replacement disk) brings down the storage unit/group and the system.

Raid 10 would have faster recovery times from a failed disk – 1 disk to resync vs. 2+. Also could survive a second disk failure if not the mirror of the failed disk.

Larger systems could have storage subsystems with mirrored disks and mirrored (or hot backup) subsystems but that may not be considered a RAID level. (101?)

—update—

Something got dropped in the middle of that long post…

RAID 01 … A failure of any one of the still >active< drives in the remaining storage unit/group before the failed mirror has been recovered (resync/resilver the failed stripe, 2+ drives) does bring the system down. May be worth using if separate controllers for each stripe (storage unit/group) is important.

RAID 10 would be a storage unit/group composed of striped data on 2 or more mirrored pair of disks. A failure of one drive in any mirrored pair does not bring system down. …

Nice explanation which made me to understand easily.

Thank you Ramesh, that makes the RAID 10 versus RAID 01 very clear as I understood it to be the opposite configuration of the groups versus the stripes. I can now understand the group itself contains the first of the data stripes as well as the mirror of the disk. My concern was the ability to rebuild the data with the loss of one drive in each set as I can see the RAID 10 has a higher fault tolerance to multiple drive failures, being all new, good quality drives will leave the odds very low to failure at any given time and the odds decrease very rapidly for multiple drive failure at the same time becomes very low. You very clearly expressed the differences of the two configurations for me and I’m sure any with the same question. Good Day to you and Happy Computing to all.

How come everyone is so hell-bent on *their* scenario for failure being the biggest problem? If you have replication across multiple machines, then there is an additional possibility of failure of the machines – this is not a RAID failure! It is the same as arguing that the 6 (as in the diagrams) hard drives are stacked vertically and a small (yet armed) child is more likely to drill holes in the hard drives at the very top and bottom, so a solution which allows for these to fail is better than one which doesn’t. Circumstantially there may be advantages to one or the other, but these are linked to said circumstances. Likewise, if the RAID controller deems a group failed because 1 drive failed this is a badly designed controller, and nothing to do with the theoretical RAID.

Here’s hoping everyone predicted (and protected against) the actual problem they end up with!

Steven,

Apologies, but I completely disagree with your last sentence. This whole thread started with a discussion about the difference between Raid 1+0 (10) and Raid 0+1, and there are distinct differences in the availability profile of each solution, and in the performance profile too, although only in niche corner cases (will not discuss more here). When designers come to implement their solution, they weigh up those differences and advantages and choose the best implementation for their requirements.

So choosing a solution (0+1) that deems a group failed because 1 drive failed is not bad design if availability is not your primary concern. It probably is a bad design if availability is your primary concern.

Nice Presentation about Raid01,Raid10 but i have some doubts here

1. As you said, if Disk 1, Disk 3 and Disk 5 (one from each group) fail, then the RAID 10 will still be functional; I agree with you. But if Disk 1 and Disk 2 fail in the same disk group, then what will happen?

And coming to the RAID01 here even if one disk fails or two disks are fail or 3 disk are fail in the same disk group1 or Disk group2 However the no of disk failures but the Raid 10 will be down.For this post i agree with you

Hey guys. Just wanted to explain it how I understand in case anyone doesn’t get the above comments .

Think of it like this:

You have 1 hard drive. It needs to read a,b,c and d. It does this at a rate of 1letter per second. It takes 4 seconds for all the letters to be read.

In raid 10 you have 4 hard drives separated into 2 groups of hard drives. Group 1 contains drive 1 and drive 2. Drive 1 contains letters a and b, which it reads at 1 letter per second. Drive 2 is a copy of this, so it also reads at 1 letter per second; however, these speeds do not add together to read a and b at 2 letters per second, as drive 2 is more like a backup. This means letters a and b take 2 seconds to be read. At the same time, group 2 (containing drives 3 and 4) reads letters c and d. Drive 3 reads letters c and d at 1 letter per second, and drive 4 is identical and a sort of backup. This means letters c and d are read in 2 seconds, and because this is done at the same time as group 1 reads a and b (also read in 2 seconds), letters a, b, c and d are all read in 2 seconds. 2x faster than one hard drive.

Raid 01 also reads a,b,c and d in 2 seconds. However, in group 1 (consisting of drive 1 and drive 2) drive 1 reads letters a and b and drive 2 reads c and d. Group 2 is a copy of this.

The main difference is that in raid 10 drives 1 and 3 could both fail and the system would still run because drives 2 and 4 would take over. This means that drives 1 and 3, 1 and 4, 2 and 3 or 2 and 4 could both be down, but the system would still be running as the two functional drives would take over. Whereas in raid 01, if drive 1 or 2 failed, group 1 would fail and group 2 would take over. This means any one of drives 1, 2, 3 or 4 could fail and the system would still be functional, but if any more failed the system would fail.

PHEW! Hope it helped.

Hello Ramesh,

Only now do I realise how ignorant I was, and that I was misinforming many colleagues. Of course neither I nor any of my colleagues had any doubt. Thanks for the enlightenment.

R. GANAPATHI RAO

Hi,

If you have 4 disks with a failure risk of 10% (old crappy disks) then the failure risk of a RAID1+0 (a RAID 1 array consisting of two RAID 0 arrays) would be (10% × 2) ^ 2 = 4%

The failure risk of a RAID0+1 (a RAID0 consisting of two RAID1) would be (10% ^ 2) × 2 = 2%

I.e. a four disk RAID1+0 is twice as likely to fail as a RAID0+1…

I’m not sure how the probabilities change with more disks, but Average Joe will most likely try to use 4 disks for this type of RAID…

/E

There is no fault tolerance in both RAID levels.. I can prove that..

Thank you for a clear and simple explanation.

One Question: Regarding the fault tolerance..

Q. What if (in RAID 10), both disks in any of the three groups fail? i.e., Disk 1 & 2 fail in group 1 OR Disk 3 & 4 fail in group 2 OR Disk 5 & 6 fail in group 3, then what? Will the system fail.. or not?

Awaiting your reply.

Please do ASAP.

Thank you!

@ AF

“Q. What if (in RAID 10), both disks in any of the three groups fail? i.e., Disk 1 & 2 fail in group 1 OR Disk 3 & 4 fail in group 2 OR Disk 5 & 6 fail in group 3, then what? Will the system fail.. or not?”

Yes. If BOTH drives in any of the the 3 mirrors in the RAID 10 illustration fail, the array becomes inoperable.

@ Remo

So, in that case, RAID 10 wouldn’t be an ideal solution for data safety. Which RAID would you recommend then? And why?

And also, I’d you want both data safety and performance. Then which one? And why?

Awaiting your reply..

Thank you!

– A.

The logic is good, Ken.

On raid 10, each mirror set is 2 of the same drive, and your controller can and will use them interchangeably. Disks 1, 4 and 5 could all fail, and the raid would run. You could pop in new drives, and it would repopulate.

On raid 01, the mirroring is /after/ the striping. You have 2 striped sets. When one drive fails in a striped set, the whole set is shit. Your controller cannot substitute disk 2 for 5 in a raid 01. If disk one and five both fail, the raid is toast.

Erik, you’ve gotten 01 and 10 reversed.

If you first stripe (0) then mirror (1) it’s 01. (a RAID 1 array consisting of two RAID 0 arrays)

If you first mirror (1) then stripe (0) it’s 10. (a RAID 0 array consisting of two RAID 1 arrays)
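
For what it’s worth, the exact figures behind the 4-disk estimate (with the labels the right way round) can be sketched as below. This assumes each disk fails independently with probability p, that a stripe set is lost as soon as any one of its disks fails, and that a mirror pair is lost only when both of its disks fail. The function names are made up for illustration.

```python
def p_fail_stripe_of_mirrors(p, pairs):
    """RAID 10: the array is lost if any mirror pair loses both of its disks."""
    return 1 - (1 - p ** 2) ** pairs


def p_fail_mirror_of_stripes(p, disks_per_stripe):
    """RAID 01 with two stripe sets: lost if both stripe sets lose at least one disk."""
    p_stripe_down = 1 - (1 - p) ** disks_per_stripe
    return p_stripe_down ** 2


p = 0.10  # the "old crappy disks" figure used above
print(round(p_fail_stripe_of_mirrors(p, pairs=2), 4))             # 0.0199
print(round(p_fail_mirror_of_stripes(p, disks_per_stripe=2), 4))  # 0.0361
```

So under these assumptions the 4-disk RAID 10 comes out at roughly 2% and the 4-disk RAID 01 at roughly 3.6%, close to the rounded 2% and 4% quoted earlier.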

It’s strange to see hundreds of nerds trying to calculate probabilities to try to prove incorrect interpretations caused by this somewhat unclear article. Endless discussion and not a single person who understands what they’re talking about, and no clear conclusion or consensus. Geez. It’s actually very simple and I will explain it to you all in simple terms without any need for calculating a single probability.

It is THIS simple:

* First, you must understand: The maximum possible storage space in both RAID 10 and RAID 01 is 50% of the total size of all disks.

* In RAID 10, this comes automatically, as you set up the RAID 0 on top of any number of individual two-disk RAID 1 (mirror) pairs. The mirroring in each pair is what halves the total available storage space since half the disks are used for redundancy.

* In RAID 01, you set up a RAID 1 (mirror) at the TOP LEVEL instead, and since mirroring halves the available storage space, this means that to get the maximum possible storage space (50% of the total disk capacity), you must create only TWO RAID 0s, by putting half of the disks in each RAID 0, and then mirroring across those RAID 0s, thus giving you the optimal 50% capacity.

* To put this into numbers: For a 24 hard disk example, the maximum, most efficient storage space layout would be: RAID 10 = A single RAID 0 stripe consisting of 12 x two-disk RAID 1 mirrors. RAID 01 = A single RAID 1 mirror consisting of 2 x TWELVE-disk RAID 0 stripes.

* Do you see the problem yet?

* If a single disk dies in RAID 10, the other disk in that particular two-disk mirror is still chugging along, and ALL other mirror pairs in the whole array are perfectly fine.

* If a second disk dies in RAID 10, you are still perfectly fine, as long as the failure is in a disk in another one of the two-disk mirror pairs. You can keep losing loads of disks and as long as you never lose both disks in the same mirror-pair, you lose NO data.

* If a single disk dies in RAID 01, ALL TWELVE DISKS IN THAT TWELVE-DISK RAID 0 STRIPE GO OFFLINE, leaving only a SINGLE REMAINING RAID-0 twelve-disk stripe alive.

* If a second disk dies in RAID 01, you are done. All data is lost forever. Goodbye RAID.

* It gets even worse for RAID 01: Let’s say you ONLY lost a single disk and the other RAID 0 is still running fine. Well, all data on your offline RAID 0 is now out of sync as further writes have taken place on the online RAID 0. So when you insert a new disk and bring the second RAID 0 back online, it must now erase ALL TWELVE HARD DISKS in the briefly-offline RAID 0 array that broke, and instead fill (re-mirror) them with the latest contents from the disks in the other RAID 0 stripe. This takes an EXTREMELY long time (copying from 12 disks to 12 disks) and puts extreme stress on the remaining healthy RAID 0 and probably kills it too before the re-mirroring can complete.

* In RAID 10, replacing a single disk is easy and safe regardless of how many hard disks are in your array: It just needs to re-mirror a single replacement disk from its mirror twin. This is fast (just a 1-to-1 copy) and SIGNIFICANTLY less likely to fail than the 12-to-12 copy in the RAID 01 example.

* These differences are true even in simple consumer-level 4-disk arrays: If you lose a single disk in such a RAID 10, you only need to re-mirror as a 1-to-1. If you lose a single disk in such a RAID 01, you need to re-mirror as a 2-to-2 to restore the de-synced mirror. And if you lose 2 disks in a 4-disk RAID 10, you can get lucky and only lose one from each mirror pair, which means all data is still safe. But if you lose a second disk in a RAID 01, you kill the only remaining RAID 0 and thus lose all data.

* In short: RAID 01 is only used by clueless people who have no idea how extremely dangerous it is. It doesn’t matter how many hard disks you have in RAID 01; lose two disks and all data is lost, BAM! In RAID 10 you can lose up to half of the disks and as long as none of the two-disk mirrors are totally destroyed you will never lose data. And as mentioned, repair is never more than a simple 1-to-1 copy.

* There is no performance reason to choose RAID 01 either. Performance between RAID 10 and RAID 01 is identical: Both have NX read and NX/2 write performance, where N is the number of disks and X is the individual disk read/write speeds.
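
The bullets above can be turned into a small back-of-the-envelope calculation. This is a sketch under the same assumptions as the list: an even number of disks, two-disk mirror pairs for RAID 10, exactly two stripe sets for RAID 01, and a controller that takes a whole stripe set offline when one member fails. The function names are invented for illustration.

```python
from fractions import Fraction


def second_failure_fatal_odds(n_disks):
    """Odds that the next disk to fail, after one has already failed, kills the array."""
    remaining = n_disks - 1
    raid10 = Fraction(1, remaining)             # only the failed disk's mirror twin
    raid01 = Fraction(n_disks // 2, remaining)  # any disk in the surviving stripe set
    return raid10, raid01


def disks_to_recopy(n_disks):
    """Disks that must be rewritten when a single failed disk is replaced."""
    return {"raid10": 1, "raid01": n_disks // 2}


for n in (4, 24):
    r10, r01 = second_failure_fatal_odds(n)
    print(n, r10, r01, disks_to_recopy(n))
# 4 1/3 2/3 {'raid10': 1, 'raid01': 2}
# 24 1/23 12/23 {'raid10': 1, 'raid01': 12}
```

The gap widens with array size: at 24 disks the second failure is fatal about 4% of the time for RAID 10 and about 52% of the time for RAID 01.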

There you go. Feel free to delete the hundreds of useless comments before this one. 😉

This is not because you don’t understand the 10 lines of maths that shows exact same result as you explain in 50 lines, that the comment is useless…

Well guys, I got a question here. If a whole group in RAID 10 fails (e.g. Group 3), your whole RAID will fail, whereas in RAID 0+1, if your whole Group 2 fails, you’ll still have those blocks mirrored. Is that right? Regards!

To add my 5 cents.

RAID 10

Every 2nd disk can fail, meaning 3 disks can fail, but data is still intact.

RAID 01

First subsequential 3 disks can fail while data is still intact.

So in theory redundancy is the same.

BUT:

Inside RAID 10, when you lose Disk 1, its mirrored copy on Disk 2 is under pressure and it is more likely that the next failure will occur on Disk 2, meaning you will lose DATA “A” and “D”.

While in RAID 01, when you lose Disk 1, the whole stripe “Group 1” is defunct and not under pressure. The other Group 2 is then under pressure, and there is an equal chance for each disk in the 2nd group to fail. So in our case there is only about a 1 in 3 chance for the next failure to occur on the 1st disk of Group 2 and lose only DATA “A” and “D”. Should any other of the 3 remaining disks fail, we will lose additional data besides DATA “A” and “D”.

With other words:

– when 1 drive fails in RAID 10, you still have 2 mirrored groups and 1 degraded group,

– when 1 drive fails in RAID 01, you are left with one single RAID 0 no-redundancy group, which is bad. Nothing is mirrored anymore.

Therefore redundancy in case you count it by “how many disks I can pull out” IS the same, but if you take into account how RAID arrays works, RAID 10 is definitely more resilient against failure than RAID 01.

ALSO when rebuilding failed drive, RAID 10 has to re-mirror only 1 disk, while RAID 01 will rebuild whole mirrored GROUP, in our case 3 disks, which means more stress and more time to rebuild.

So, WHY DOES RAID 0+1 EXIST?

Truly, it is mostly implemented by mistake, maybe by a sys admin who considered it faster (which is NOT true) and implemented RAID 0+1 because he CAN, not because there would be ANY reason for him to do it.

On NETWORK level there is, somehow, reason to implement RAID 0+1 or even 6+1 using DRBD or some other “over-the-network” syncing protocol to replicate local RAID 0 or RAID 6 servers. In this scenario each local copy should hold the WHOLE data set, meaning the whole stripe of all data, and mirror it to the other side across the network. This is maybe the only implementation of RAID 0+1 which makes any sense at all.

Just one more question (perhaps stupid, but…)

What if a single file (or a few files) on both the regular drive and the mirror drive (in either RAID10 or RAID01 setup) becomes corrupt, but the drives themselves are still functioning, will the entire array then also be lost, or do you just get a read error on that single file, while the other files are still readable?

To be practical, I have a client with a Raid 1+0 configuration as explicitly reported by the Acutool , but the config says 2 mirrorgroups of 6 disks.

As I understood this discussion it should have been represented as Raid 0+1 , since there are only 2 mirrorgroups over 12 disks. Mirror of stripes so to speak.

I then suspect that the raid controller will self decide how to deal with broken disks , no matter the definition.

Or it is Schrodinger cat 🙂

First, this is a very nice article. Thank you for it.

Now my question. Above you said:

“… In the above RAID 01 diagram, if Disk 1 and Disk 4 fails, both the groups will be down. So, the whole RAID 01 will fail. …”

That is a clearly correct statement … however the more important question is this one. Could you confirm that this statement is also true?:

–> In the above RAID 01 diagram, if Disk 1 and Disk 5 fails,

–> both the groups will be down. So, the whole RAID 01 will fail.

Thanks for clarifying.

— Duane

Great Article!. Finally I cleared my doubts about these 2 RAID groups. Thanks for sharing!

Although, I am confused about this one for RAID 0+1: “It requires minimum of 3 disks. But in most cases this will be implemented as minimum of 4 disks.” Not sure how?

I was curious about the RAID 10 vs RAID 01 discussion. For a single drive failure both RAID systems survive. What is interesting is what happens with a hypothetical TWO drive failure. The math of combinations tells us that the number of two-drive combinations out of a set of six drives is C(6,2) = 6! / ((6-2)! × 2!) = 15 possible combinations. What is interesting is that if you enumerate the two drive failures in both RAID configurations, you discover that in the RAID 10 case, of the 15 pairs of failed drives, 12 pairs leave the array working while 3 pairs cause it to fail. In the RAID 01 case, of the 15 pairs of failed drives, 9 pairs leave the array working while 6 pairs cause it to fail. So in this example, assuming a two drive failure, the RAID 10 is twice as reliable (½ the failure rate) as the RAID 01.

@Ramesh: thank you for raising up a so controversial discussion 🙂 I arrived there through a link from Spiceworks about RAID solutions

@Robert F. Brost: for the RAID 01 implementation as described by Ramesh, only 6 pairs leave the array still working. See the details from the post left by Michael Michael on October 12, 2012, 12:11 pm, quoted below:

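“In case this has not been settled yet, here is every possibility for a 2 drive failure:”

| Drives | R 0+1 | R 1+0 |
| --- | --- | --- |
| 1,2 | Up | Down |
| 1,3 | Up | Up |
| 1,4 | Down | Up |
| 1,5 | Down | Up |
| 1,6 | Down | Up |
| 2,3 | Up | Up |
| 2,4 | Down | Up |
| 2,5 | Down | Up |
| 2,6 | Down | Up |
| 3,4 | Down | Down |
| 3,5 | Down | Up |
| 3,6 | Down | Up |
| 4,5 | Up | Up |
| 4,6 | Up | Up |
| 5,6 | Up | Down |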
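
The same enumeration can be reproduced with a short script. This is a sketch that assumes the grouping from the article’s diagrams (RAID 10 mirror pairs 1-2, 3-4, 5-6; RAID 01 stripe sets 1-2-3 and 4-5-6) and a controller that marks a whole stripe set as failed when one of its disks fails. The constant and function names are made up for illustration.

```python
from itertools import combinations

MIRROR_PAIRS = [{1, 2}, {3, 4}, {5, 6}]  # RAID 10 groups from the article
STRIPE_SETS = [{1, 2, 3}, {4, 5, 6}]     # RAID 01 groups from the article


def raid10_up(failed):
    """Stripe of mirrors: up while every mirror pair keeps at least one disk."""
    return all(not pair <= failed for pair in MIRROR_PAIRS)


def raid01_up(failed):
    """Mirror of stripes: up while at least one stripe set has lost no disk."""
    return any(not (s & failed) for s in STRIPE_SETS)


# Map each two-disk failure to (RAID 0+1 up?, RAID 1+0 up?).
results = {pair: (raid01_up(set(pair)), raid10_up(set(pair)))
           for pair in combinations(range(1, 7), 2)}

for pair, (r01, r10) in results.items():
    print(pair, "Up" if r01 else "Down", "Up" if r10 else "Down")

raid01_survivable = sum(r01 for r01, _ in results.values())
raid10_survivable = sum(r10 for _, r10 in results.values())
print(raid01_survivable, raid10_survivable)  # 6 12
```

Out of 15 possible two-disk failures, this model leaves RAID 0+1 up in 6 cases and RAID 1+0 up in 12.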

Cheers from France

Frankly when you don’t use RAID10 itself and there are reasons for: https://bugzilla.kernel.org/show_bug.cgi?id=194551 you would just setup first two RAID1 and *on top* a RAID0 to stripe the stuff and have the same as a RAID10

you can lose a single disk on each of the RAID1 just because both are mirrored, and the RAID0 on top doesn’t care about the disks themselves but about both stripes, which are there as long as each of the underlying RAID1 has at least one disk

What will be the 3-disks raid 01 configuration? (as mentioned above).

>> It requires minimum of 3 disks.

And by the way, raid 10 can be built with 2 disk only (mdadm can do it). It will write as Raid 1 and read as raid 0.

The example you have given is artificial. In this scenario yes performance is exactly the same in both configurations so clearly choose RAID 10.

But RAID 01 can be used to increase write performance over RAID 10. All you need to do is increase the size of the stripe set to, say, 5 disks in both mirrors and you have achieved 5x write speed.

RAID 10 is good for a workload where you have heavy data ingestion and where the written data only resides in the array temporarily. It’s not a typical scenario but it’s highly effective for logging or incoming stream data that is then reduced or transformed.

I don’t think the author put enough effort into helping everyone understand why raid 10 has better fault tolerance than raid 01. Here is a picture that will exactly explain why. To make it short and simple, when you mirror something it won’t necessarily be copied in the exact order.